GRE Tunnels


Revision as of 11:43, 3 May 2018

At this point, the CityLab testbed only has limited support for automatically creating links between nodes using the JFed interface. Standard IP-in-IP GRE Tunnels (gre-tunnel links in JFed) between two nodes are currently supported. Adding support for automatically creating Ethernet-in-IP GRE Tunnels (egre-tunnel links in JFed) as well as 'native' Ethernet links (lan links in JFed) is on our TODO list. As a workaround, it is possible to manually create egre-tunnel links between nodes once the experiment is running. Once a tunnel has been established, it can be used just like any other Ethernet interface, except that it has a slightly lower MTU.

This page provides a quick how-to guide for creating standard 'gre-tunnels' in JFed and then explains how to manually create 'egre-tunnels'.

Creating Links between nodes through the JFed interface

Creating links between nodes through the JFed interface is straightforward and can be done as follows:

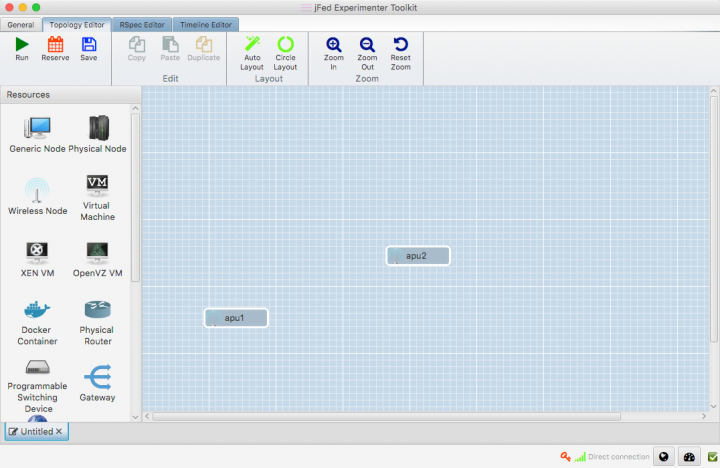

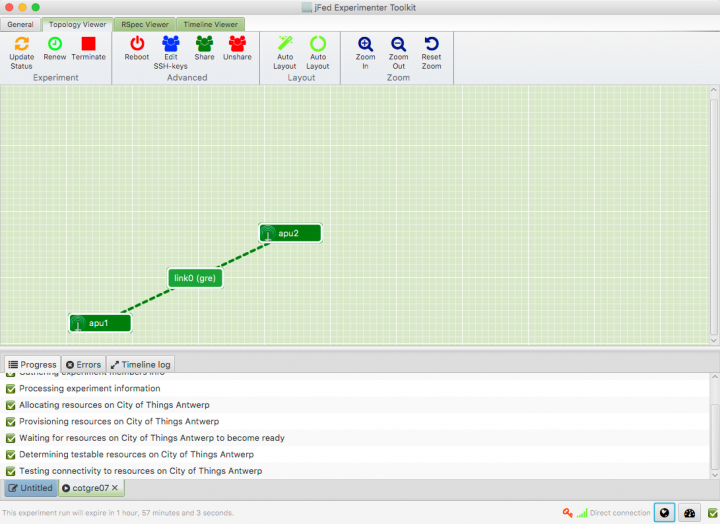

- Start JFed, click on 'New' and drag in two wireless nodes. For this tutorial, we'll assume that these nodes are called apu1 and apu2.

- Edit the node properties so that both nodes are sourced from the City of Things Antwerp testbed (by default, Wilab2 is used).

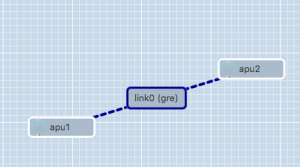

- Drag a line from apu1 to apu2. A link element connecting the two nodes is automatically created.

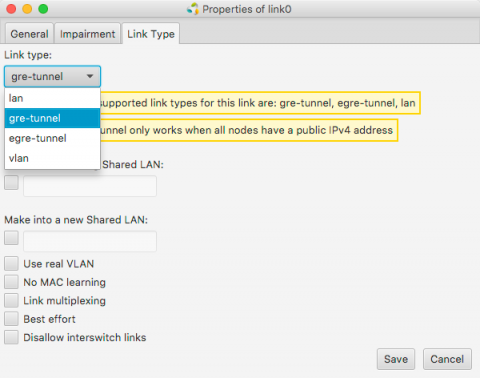

- If you are using JFed GUI 5.9 or above, the correct link type (gre) is already configured and shown between braces after the name of the link. If this is not the case, the link type needs to be configured manually:

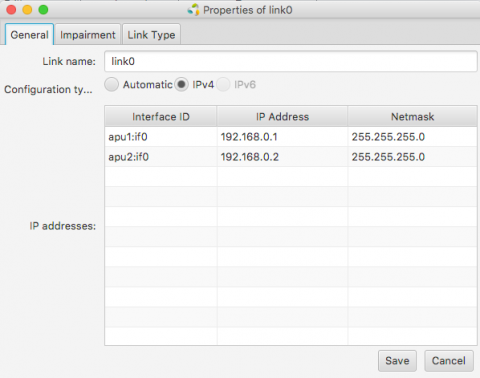

- JFed will automatically configure an IP address on the tunnel interface of both nodes. Optionally, you can modify these IP addresses in the General tab of the Link options window.

- Click 'Run' to start your slice. When all nodes are green, your experiment has started and the GRE-tunnel between the two nodes has been configured.

- SSH into the apu1 node.

- Run ifconfig link02 to verify that the GRE tunnel has been created:

user@apu1:~$ ifconfig link02

link02 Link encap:UNSPEC HWaddr 8F-81-55-10-00-00-80-3F-00-00-00-00-00-00-00-00

inet addr:192.168.0.1 P-t-P:192.168.0.1 Mask:255.255.255.0

inet6 addr: fe80::200:5efe:8f81:5510/64 Scope:Link

UP POINTOPOINT RUNNING NOARP MTU:1434 Metric:1

RX packets:2 errors:0 dropped:0 overruns:0 frame:0

TX packets:3 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:112 (112.0 B) TX bytes:168 (168.0 B)

- Ping the tunnel endpoint on apu2 by running ping -c 4 <ip>, where <ip> is the IP address configured for the tunnel endpoint on apu2. If you have not changed it, this is 192.168.0.2:

user@apu1:~$ ping -c 4 192.168.0.2

PING 192.168.0.2 (192.168.0.2) 56(84) bytes of data.

64 bytes from 192.168.0.2: icmp_seq=1 ttl=64 time=2.21 ms

64 bytes from 192.168.0.2: icmp_seq=2 ttl=64 time=1.60 ms

64 bytes from 192.168.0.2: icmp_seq=3 ttl=64 time=1.46 ms

64 bytes from 192.168.0.2: icmp_seq=4 ttl=64 time=1.51 ms

--- 192.168.0.2 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3004ms

rtt min/avg/max/mdev = 1.464/1.699/2.218/0.303 ms

Manually setting up EGRE-tunnels

A number of scripts are provided to make it as easy as possible to create and manage EGRE-tunnels. Before any EGRE-tunnels can be created, these scripts first need to be installed on every node. This can be done by running the following one-liner:

wget -O- https://doc.lab.cityofthings.eu/w/images/9/93/Gre-utils.tar.gz | sudo tar -C /usr/local/ -zxvf -

This command will:

- Download the Gre-utils.tar.gz archive

- Install the scripts in /usr/local
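Because the scripts have to be present on every node, it can help to run the one-liner on all nodes from a single machine. The sketch below makes two assumptions that the testbed does not guarantee. First, each node name (apu1, apu2, ...) must be reachable with a plain ssh <name> from the machine where you run it. Second, sudo on the nodes must not prompt for a password. The function name install_gre_utils is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: run the gre-utils install one-liner on several nodes.
# Assumes SSH access to every node name given and password-less sudo there.
# Set DRY_RUN=1 to print the commands instead of running them.

GRE_UTILS_URL="https://doc.lab.cityofthings.eu/w/images/9/93/Gre-utils.tar.gz"

install_gre_utils() {
  local host remote_cmd
  # Passed to ssh as a single string, so the whole pipeline runs on the node
  remote_cmd="wget -O- $GRE_UTILS_URL | sudo tar -C /usr/local/ -zxvf -"
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf 'ssh %s %s\n' "$host" "$remote_cmd"
    else
      ssh "$host" "$remote_cmd" || {
        echo "installation failed on $host" >&2
        return 1
      }
    fi
  done
}
```

After sourcing the file, install_gre_utils apu1 apu2 would install the scripts on both nodes of the two-node example. DRY_RUN=1 install_gre_utils apu1 apu2 only prints the ssh commands.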

Creating a link between two nodes

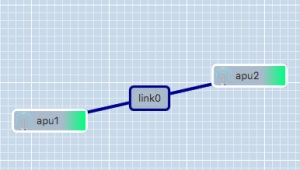

A simple GRE tunnel can be created by running the gre_add_tunnel script on both nodes.

The setup shown in the example on the right can be created as follows:

- On apu1 run:

sudo gre_add_tunnel -c 192.168.0.1/24 link0 apu2

- On apu2 run:

sudo gre_add_tunnel -c 192.168.0.2/24 link0 apu1

In the above commands, link0 is the name of the interface to create and apuX is the host to create a tunnel to. The optional -c flag can be used to automatically configure the given IPv4 address (in address/prefix notation) on the newly created interface.
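The sketch below shows roughly what happens on a node. It creates an Ethernet-in-GRE interface directly with iproute2, using the Linux gretap link type. It is an assumption that gre_add_tunnel does something similar; this is not the script's source. Host name resolution is left out, so both endpoints must be given as IPv4 addresses. The function names egre_up and egre_down, as well as the 192.0.2.x addresses in the usage example, are placeholders.

```sh
#!/bin/sh
# Sketch only: an Ethernet-in-GRE (gretap) link set up with iproute2.
# Assumption: gre_add_tunnel does something comparable; this is not its source.
# Both endpoints must already be IPv4 addresses (no name resolution here).
# Set DRY_RUN=1 to print the commands instead of executing them (needs root).

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# egre_up <ifname> <local-ip> <remote-ip> [<address/prefix>]
egre_up() {
  local ifname="$1" local_ip="$2" remote_ip="$3" cidr="${4:-}"
  run ip link add "$ifname" type gretap local "$local_ip" remote "$remote_ip" || return 1
  # Optional address, comparable to the -c flag of gre_add_tunnel
  if [ -n "$cidr" ]; then
    run ip addr add "$cidr" dev "$ifname" || return 1
  fi
  run ip link set "$ifname" up
}

# egre_down <ifname>: deleting the interface also removes its address
egre_down() {
  run ip link del "$1"
}
```

Suppose 192.0.2.1 and 192.0.2.2 were the addresses of apu1 and apu2. Then DRY_RUN=1 egre_up link0 192.0.2.1 192.0.2.2 192.168.0.1/24 would print the three commands for apu1. On apu2, the local and remote addresses are swapped and 192.168.0.2/24 is used.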

To allow the node names configured in JFed to be used to configure GRE tunnels, the gre_add_tunnel script tries to behave somewhat intelligently when resolving hostnames:

- If the specified host is a Fully Qualified Domain Name (i.e. it contains a '.'), a regular DNS query is performed.

- If the specified host is a simple hostname (no '.' in the hostname), the gre_add_tunnel script first tries to resolve the specified host to a node name specified in JFed. If this fails, the IP address of the host is resolved using a normal DNS query.

- If the specified host is a valid IPv4 address, no DNS resolution is performed.
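The resolution rules above can be sketched as a small classifier. A valid IPv4 address also contains '.', so it has to be checked before the FQDN rule. The sketch only decides which rule applies. This page does not describe how gre_add_tunnel looks up JFed node names, so that step is not implemented. The function names are hypothetical.

```sh
#!/bin/sh
# is_ipv4 <string>: succeeds for dotted-quad addresses such as 192.168.0.2
is_ipv4() {
  local old_ifs octet
  # Only digits and single dots, not starting or ending with a dot
  case "$1" in
    ''|*[!0-9.]*|*..*|.*|*.) return 1 ;;
  esac
  old_ifs="$IFS"
  IFS=.
  set -- $1          # split on '.' (safe: only digits and dots remain)
  IFS="$old_ifs"
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
}

# classify_gre_host <host>: prints which resolution rule applies
classify_gre_host() {
  # IPv4 first: an address like 192.168.0.2 also contains '.'
  if is_ipv4 "$1"; then
    echo ipv4    # used as-is, no DNS resolution
  else
    case "$1" in
      *.*) echo fqdn ;;   # regular DNS query
      *)   echo short ;;  # JFed node name first, then DNS (lookup not shown)
    esac
  fi
}
```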

Once the GRE tunnel is created, it can be used just like any other Ethernet interface, except that the MTU is a bit lower than normal.

Removing the tunnel is done using the gre_del_tunnel script.

For example, to remove the tunnels created above, the following command would have to be run on each node:

sudo gre_del_tunnel link0

Creating a link between three or more nodes

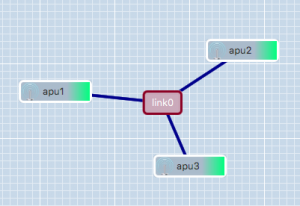

Creating a link between more than two nodes is (slightly) more complicated since GRE only allows Point-to-Point tunnels to be created. To work around this, GRE Tunnels are created from one 'central' node to all other nodes on the link. These GRE Tunnels are then 'bridged' together on the central node to form a shared subnet. Such a 'GRE Bridge' can easily be created using the gre_add_bridge script.
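The sketch below is a rough iproute2 equivalent of such a 'GRE Bridge'. It creates one gretap tunnel per remote node and attaches each tunnel to a Linux bridge that carries the IP address. The <bridge>_gre_<n> port names follow the brctl show output on this page. Whether gre_add_bridge runs exactly these commands is an assumption, and all function names and addresses are placeholders.

```sh
#!/bin/sh
# Sketch only: a 'GRE Bridge' built with iproute2 on the central node.
# Assumption: gre_add_bridge does something comparable; this is not its source.
# All endpoints must already be IPv4 addresses (no name resolution here).
# Set DRY_RUN=1 to print the commands instead of executing them (needs root).

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# egre_bridge_up <bridge> <local-ip> <address/prefix> <remote-ip>...
egre_bridge_up() {
  if [ "$#" -lt 4 ]; then
    echo "usage: egre_bridge_up <bridge> <local-ip> <address/prefix> <remote-ip>..." >&2
    return 1
  fi
  local bridge="$1" local_ip="$2" cidr="$3" n=0 remote port
  shift 3
  run ip link add name "$bridge" type bridge || return 1
  # One point-to-point gretap tunnel per remote node, enslaved to the bridge
  for remote in "$@"; do
    port="${bridge}_gre_${n}"
    run ip link add "$port" type gretap local "$local_ip" remote "$remote" || return 1
    run ip link set "$port" master "$bridge" || return 1
    run ip link set "$port" up || return 1
    n=$((n + 1))
  done
  # The shared subnet address lives on the bridge, not on the tunnels
  run ip addr add "$cidr" dev "$bridge" || return 1
  run ip link set "$bridge" up
}
```

On apu1 of the 3-node example this would be called as egre_bridge_up link0 <apu1-ip> 192.168.0.1/24 <apu2-ip> <apu3-ip>, with the placeholders replaced by real addresses. apu2 and apu3 then only need a plain tunnel back to apu1.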

The 3-node setup shown in the example on the right can be created as follows:

- On apu1 run:

sudo gre_add_bridge -c 192.168.0.1/24 link0 apu2 apu3

As with the gre_add_tunnel script, link0 is the name of the interface to create and the -c flag can optionally be used to configure an IPv4 address on the interface. The remaining parameters, in this case apu2 and apu3, are the hosts to connect to. These hostnames are resolved to IP addresses in exactly the same way as for the gre_add_tunnel script.

- On apu2 run:

sudo gre_add_tunnel -c 192.168.0.2/24 link0 apu1

- On apu3 run:

sudo gre_add_tunnel -c 192.168.0.3/24 link0 apu1


In this case, apu1 is used as the central node, and apu2 and apu3 connect to it in exactly the same way as in the two-node scenario.

Once the 'GRE Bridge' has been created, it can be used just like any other Linux bridge. Additional interfaces can be added or removed using the brctl command. The bridge's status can be queried using the brctl show command:

user@apu1:~$ brctl show link0

bridge name bridge id STP enabled interfaces

link0 8000.a2b74a105a76 no link0_gre_0

link0_gre_1

In the above output, the link0_gre_x interfaces are the GRE tunnels to the individual nodes.
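As an alternative to brctl, bridge ports can also be managed with iproute2. The kernel lists the ports of a bridge under /sys/class/net/<bridge>/brif. The sketch below attaches and detaches an extra interface and lists the ports. The helper names are hypothetical, and the equivalent brctl commands are given in the comments.

```sh
#!/bin/sh
# Hypothetical helpers for managing the ports of an existing GRE Bridge.
# Set DRY_RUN=1 to print the commands instead of executing them (needs root).

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# bridge_attach <bridge> <iface>   (same as: brctl addif <bridge> <iface>)
bridge_attach() {
  run ip link set dev "$2" master "$1"
}

# bridge_detach <iface>            (same as: brctl delif <bridge> <iface>)
bridge_detach() {
  run ip link set dev "$1" nomaster
}

# bridge_ports <bridge>: one port per line, read from sysfs.
# SYSFS_NET can point elsewhere for testing; it defaults to /sys/class/net.
bridge_ports() {
  local dir="${SYSFS_NET:-/sys/class/net}/$1/brif"
  [ -d "$dir" ] || { echo "$1 is not a bridge" >&2; return 1; }
  ls "$dir"
}
```

Take wlan0 as a hypothetical AP interface. Running bridge_attach link0 wlan0 and then bridge_ports link0 should list wlan0 next to link0_gre_0 and link0_gre_1.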

Removing the 'GRE Bridge' is done using the gre_del_bridge script. It is highly recommended to use this script to remove the bridge (rather than the brctl command), as it not only removes the bridge itself but also cleans up the GRE tunnels to the individual nodes.

To remove the bridge created in the above example, the following command would have to be run:

- On apu1:

sudo gre_del_bridge link0

Once the 'GRE Bridge' has been removed, the GRE tunnels on the other nodes (apu2 and apu3) also need to be cleaned up. This is done in exactly the same way as in the two-node scenario:

sudo gre_del_tunnel link0

Limitations

Single tunnel between two nodes

GRE tunnels are identified solely by the source and destination IP address of the encapsulating packet. This means that it is not possible to establish two independent tunnels between the same two nodes at the same time.

For the two-node scenario, this means that only a single link can be created between two nodes.

For the multi-node scenario, a node can participate in multiple links as long as:

- each link uses a different 'central node'

- each 'central node' is only part of one link at the same time

It should be noted, however, that GRE tunnels can be used in combination with VLAN tagging. If you really need to create multiple links between two nodes, it is therefore possible to do so, but this cannot be done using the scripts provided here.
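The gre_* scripts do not support this approach, but it can be sketched as follows. 802.1Q VLAN sub-interfaces are stacked on top of a single Ethernet-in-GRE interface, one per logical link. The function name, VLAN IDs and subnets below are made up. The same commands, with the peer's addresses, have to be run on the other node.

```sh
#!/bin/sh
# Sketch only: several VLANs over one Ethernet-in-GRE interface.
# Not supported by the gre_* scripts; VLAN IDs and subnets are examples.
# Set DRY_RUN=1 to print the commands instead of executing them (needs root).

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# vlan_on_tunnel <tunnel-if> <vlan-id> <address/prefix>
# Creates <tunnel-if>.<vlan-id>; run the same on the peer with its own address.
vlan_on_tunnel() {
  local tun="$1" id="$2" cidr="$3"
  local vif="$tun.$id"
  run ip link add link "$tun" name "$vif" type vlan id "$id" || return 1
  run ip addr add "$cidr" dev "$vif" || return 1
  run ip link set "$vif" up
}
```

For example, run vlan_on_tunnel link0 10 10.10.0.1/24 and vlan_on_tunnel link0 20 10.20.0.1/24 on one node. With matching .2 addresses on the other node, this would give two independent links over the same tunnel.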

Reduced MTU

Using a GRE tunnel incurs an overhead of 38 bytes. This is because the original Ethernet frame, including its 14-byte Ethernet header, is encapsulated in a 4-byte GRE header and an additional 20-byte IPv4 header before it is sent to the other tunnel endpoint. To prevent fragmentation, the Linux kernel automatically reduces the MTU of the GRE interface. As a result, the MTU inside the tunnel is 38 bytes lower than the MTU outside the tunnel.
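The 38 bytes break down as shown below. This assumes an IPv4 underlay and a basic GRE header without the optional key, checksum or sequence number fields, which is an assumption about how the tunnels are configured. The helper name egre_mtu is hypothetical.

```sh
#!/bin/sh
# Overhead of Ethernet-in-GRE on an IPv4 underlay, assuming a basic GRE
# header without key, checksum or sequence number:
#   20 bytes  outer IPv4 header
#    4 bytes  GRE header
#   14 bytes  inner Ethernet header (now carried as payload)
EGRE_OVERHEAD=$((20 + 4 + 14))   # = 38

# egre_mtu <outer-mtu>: MTU that remains available inside the tunnel
egre_mtu() {
  echo $(($1 - EGRE_OVERHEAD))
}
```

With a standard 1500-byte Ethernet MTU on the underlying interface, this leaves 1462 bytes inside the tunnel. Running egre_mtu "$(cat /sys/class/net/eth0/mtu)" does the same calculation for the actual MTU of an interface, where eth0 is a placeholder for the node's uplink.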

Apart from a small decrease in performance, this is usually not a big problem, with one notable exception. Suppose, for instance, that a WiFi AP interface is bridged to a GRE tunnel. The MTU of the bridge is then set to the smallest MTU of its 'slave' interfaces (in this case the GRE tunnel). This lower MTU, however, is not automatically communicated to the connected WiFi clients. As a result, these clients may still send packets that are larger than the MTU of the bridge. Since the Linux bridge drops these packets, this can lead to all sorts of nasty problems. The most common ones are dropped UDP packets, and TCP sessions that seem to work fine but suddenly hang when more than a few bytes at a time are sent over them.

To resolve this issue, the MTU of the WiFi clients should be reduced to the MTU of the Linux bridge. When static IP addresses are used, this can easily be done using the ip link command:

ip link set mtu <mtu> dev <device>

If DHCP is used, the lower MTU should be advertised by the DHCP server.
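This page does not say which DHCP server is used. If it happens to be dnsmasq, the bridge MTU can be advertised with the standard DHCP option 26 (Interface MTU, RFC 2132). The sketch below reads the MTU of the bridge from sysfs and prints the matching dnsmasq configuration line. The function names are hypothetical, and clients must honour option 26 for this to have any effect.

```sh
#!/bin/sh
# bridge_mtu <bridge>: current MTU of the bridge as reported by the kernel.
# SYSFS_NET can point elsewhere for testing; it defaults to /sys/class/net.
bridge_mtu() {
  cat "${SYSFS_NET:-/sys/class/net}/$1/mtu"
}

# dnsmasq_mtu_option <bridge>: dnsmasq line advertising that MTU via
# DHCP option 26 (Interface MTU); assumes dnsmasq is the DHCP server
dnsmasq_mtu_option() {
  local mtu
  mtu=$(bridge_mtu "$1") || return 1
  echo "dhcp-option=26,$mtu"
}
```

The printed line belongs in the dnsmasq configuration, and dnsmasq has to be restarted afterwards. With a 1500-byte underlay and the 38-byte overhead described above, the value would typically be 1462.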